Real-Time Streaming Data Analysis Project Wins SC19 SCinet Challenge

December 04, 2019 | Mary Bass

Two essential elements of the cyberinfrastructure required to advance modern science are networking and high-performance computing resources. That’s what SCinet set out to demonstrate at SC19 in Denver with its first-ever SCinet Technology Challenge.

And we’re proud that Globus played a role in the winning project!

Figure 1: Zhengchun Liu (2nd from left) and Tekin Bicer (3rd from left) accept award, while Ian Foster (far left) and Mike Papka (far right) look on

About the Challenge

The inaugural event featured experiments highlighting closely coupled operations of sophisticated networking, computing and storage infrastructures, with the goal of demonstrating how both HPC and networking play a key role in advancing modern data-driven scientific applications.

The event culminated in live demonstrations before a jury of academic and industry thought leaders from organizations like Oak Ridge National Lab, TACC and Lawrence Berkeley National Lab.

And the Winner Is…

The winning entry, “Real-Time Analysis of Streaming Synchrotron Data,” was presented by project lead Tekin Bicer on behalf of a team from Argonne National Laboratory, Northwestern University, StarLight, Northern Illinois University, and the University of Chicago. Their project, which involved streaming light source data from the SC19 show floor to Argonne’s Leadership Computing Facility (ALCF) outside Chicago, “received the top recognition for an exemplary blend of networking, computing, and storage,” according to the judges.

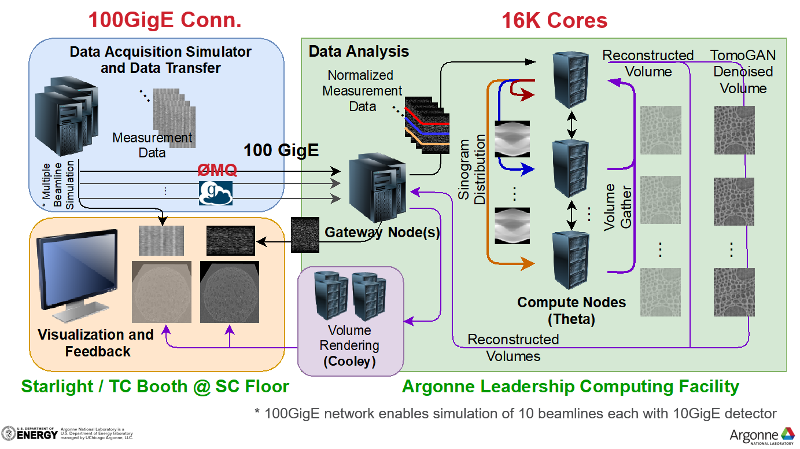

Figure 2: Demo setup for "Real-Time Analysis of Streaming Synchrotron Data" project

In this project, team members in Denver simulated data acquisition from 10 light source beamlines, then streamed the data to ALCF at close to 100 Gbps for analysis and storage. The analysis was performed in real time on 256 nodes of Theta (ALCF’s supercomputer introduced in 2016 as an interim system for Aurora), and included iterative reconstruction of a 3D volume from 2D tomographic images and GAN-based neural network denoising to improve image quality. The team then streamed the results to ALCF’s Cooley cluster for rendering, and finally streamed the rendered 3D images back to the show floor in Denver for visualization.
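To put the link rate in perspective, the short calculation below converts a sustained 100 Gbps into bytes per second and per hour. It is only a back-of-the-envelope sketch: the rate is treated as exactly 100 Gbps (the article says "close to"), the even split across the 10 beamlines is an assumption not stated by the team, and the function names are illustrative.

```python
# Back-of-the-envelope data volumes for a link running at about 100 Gbps.
LINK_GBPS = 100       # aggregate rate cited in the article (approximate)
NUM_BEAMLINES = 10    # simulated beamlines cited in the article


def gbps_to_gigabytes_per_second(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8


def terabytes_per_hour(gbps: float) -> float:
    """Data moved in one hour at a sustained rate, in decimal terabytes."""
    return gbps_to_gigabytes_per_second(gbps) * 3600 / 1000


aggregate_gb_per_s = gbps_to_gigabytes_per_second(LINK_GBPS)
aggregate_tb_per_hour = terabytes_per_hour(LINK_GBPS)

# Assumption: traffic is divided evenly across beamlines.
per_beamline_gbps = LINK_GBPS / NUM_BEAMLINES

print(f"Aggregate: {aggregate_gb_per_s} GB/s, {aggregate_tb_per_hour} TB/hour")
print(f"Per beamline (even split assumed): {per_beamline_gbps} Gbps")
```

At that rate the link carries 12.5 GB every second, or roughly 45 TB per hour of sustained streaming.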

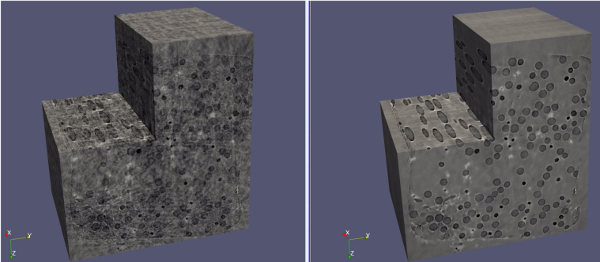

Figure 3: Visualization of real-time 3D volume reconstructions (left figure shows original reconstruction; right figure shows the same volume after denoising using TomoGAN)

Zhengchun Liu, a project team member from Argonne, explains: “We used ZeroMQ to stream one beamline's data to Theta for analysis. Since the 256 Theta compute nodes we used for the demo could process only one beamline's data, we used Globus to send the data from the other 9 beamlines to ALCF separately, to save for future processing.”
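The split Liu describes, one beamline streamed for live analysis and the rest transferred for storage, can be sketched as a simple routing plan. This is an illustration only: it does not call ZeroMQ or Globus, and `Route`, `plan_routes`, and the `realtime_slots` parameter are hypothetical names, not part of the team's software.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Route:
    beamline: int    # 1-based beamline index (hypothetical numbering)
    transport: str   # "stream" or "bulk-transfer"
    purpose: str


def plan_routes(num_beamlines: int, realtime_slots: int) -> List[Route]:
    """Assign the first `realtime_slots` beamlines to live streaming and
    send the remainder by bulk transfer for later processing."""
    if num_beamlines < 0 or realtime_slots < 0:
        raise ValueError("counts must be non-negative")
    routes = []
    for beamline in range(1, num_beamlines + 1):
        if beamline <= realtime_slots:
            routes.append(Route(beamline, "stream", "real-time analysis"))
        else:
            routes.append(Route(beamline, "bulk-transfer", "store for later processing"))
    return routes


# Demo figures from the article: 10 beamlines, compute capacity for 1 in real time.
routes = plan_routes(num_beamlines=10, realtime_slots=1)
for route in routes:
    print(f"beamline {route.beamline}: {route.transport} ({route.purpose})")
```

In the demo, the "stream" role was played by ZeroMQ and the "bulk-transfer" role by Globus; raising `realtime_slots` models what more compute capacity would allow.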

You can view slides from the presentation here.

According to Rajkumar Kettimuthu, one of the project leads, “there were some scary moments just hours before the challenge with the failure of the 100G link at Argonne. We’re grateful to the ALCF operations and Argonne networking folks who worked hard and fixed it in a timely manner (that link is important for production projects too, not just our demo!). They identified the source of the problem just 1 hour before the demo and fixed it 20 minutes before the demo!”

Project Significance

Each of the challenge entries addressed important use cases such as real-time weather forecasting and IoT sensing. For the winning project, the team chose to focus on the challenge of streaming instrument data for analysis and rendering.

Instruments like cryo-EM systems and accelerator-based light sources are crucial tools for addressing grand challenge problems in life sciences, energy, climate change, and information technology. When working with these systems, timely data analysis and feedback are essential – yet there is often a long delay between data collection and analysis, which prevents real-time decision making and thus compromises researchers’ productivity and their ability to gain timely insights.

These problems will become more serious as next-generation photon sources (such as the Argonne Advanced Photon Source upgrade) come online, since beam intensity – and the resulting data sizes and computational demand – will increase by orders of magnitude. Therefore, investigations into technologies and processes that can streamline such work are essential to preparing for the demands of future research.

Congratulations to Tekin Bicer, Zhengchun Liu and the entire winning team for tirelessly working on this challenge for several weeks – and to the ALCF operations team for their outstanding support! Congrats too to the other exceptional entries from UNC Chapel Hill, USC/ISI, UMass Amherst, and Rutgers University, as well as from the University of Utah Center for HPC, Murray School District, and the Utah Education and Telehealth Network.